Dockerfile - Best Practices

One of the great benefits of using Docker as a containerization engine is that it’s thin and lightweight, which leads to less overhead and overall better performance for your applications than traditional non-containerized setups (where the dependencies of one part of the application can become a bottleneck for another part hosted on the same machine).

Another advantage comes from Dockerfiles themselves: they give you repeatable “recipes” that you can use to deploy an identical replica of the application everywhere (local environment, staging, production).

However, a badly written Dockerfile could actually result in more frustration than benefit for everyday development and – more importantly – application performance.

Here are some Dockerfile best practices and tips on how to avoid that.

Incorrect Dockerfile ordering leads to cache bust

FROM debian

COPY . /app

RUN apt-get update

RUN apt-get -y install ssh vim

When starting a new project, the above Dockerfile might look like a good idea. You have a repeatable recipe you can run again and again: it copies your application source code to the /app folder in the image and installs the SSH client and Vim for later use. After the first build of the image, subsequent builds will use the cache and you will be fine.

However, if you change your application source code and rebuild the image, you will notice that the second step (COPY) busts the cache of all subsequent steps. Docker invalidates a COPY layer whenever the copied files change, and every layer after it has to be rebuilt too, meaning that SSH and Vim are reinstalled every single time you change a line of code. Not great. Here’s a better approach:

FROM debian

RUN apt-get update

RUN apt-get -y install ssh vim

COPY . /app

No more cache-busting. The second and third instructions (the two RUN steps) are cached and reused even when the source code changes. Rule of thumb: put instructions that change rarely towards the top.
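The same rule of thumb applies to application dependencies, not just system packages. The following is a minimal sketch, assuming a hypothetical Node.js project with package.json and package-lock.json next to the source code; the base image tag and file names are illustrative and not taken from the examples above.

```dockerfile
# Hypothetical Node.js project; the base image tag is an assumption.
FROM node:20

WORKDIR /app

# Dependency manifests change rarely, so copy them on their own first.
COPY package.json package-lock.json ./

# This layer is rebuilt only when one of the manifests above changes.
RUN npm ci

# Source code changes often, so it goes last.
# Editing a source file leaves all layers above this line cached.
COPY . .
```

With this ordering, a code-only change should re-run just the final COPY, while the dependency install stays cached.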

Be specific about what you copy, to avoid cache busts

Let’s check out this example:

FROM php:7.1-apache

COPY . /app

ENV APACHE_DOCUMENT_ROOT=/app/dist

RUN sed -ri -e 's!/var/www/html!${APACHE_DOCUMENT_ROOT}!g' /etc/apache2/sites-available/*.conf

RUN sed -ri -e 's!/var/www/!${APACHE_DOCUMENT_ROOT}!g' /etc/apache2/apache2.conf /etc/apache2/conf-available/*.conf

The above builds an Apache image with PHP that serves static files from the dist directory of our source code (e.g. an Angular application that, when built, generates static HTML, JS, and CSS in the dist folder).

This works, but COPY . /app pulls in the entire build context, so any change to any file (a configuration file, a README, other metadata) invalidates the cache for that step and every step after it, even when nothing in dist has changed. The result is slower Docker builds.

A better option would be to copy just the “artifacts” instead of the source code:

# Copy only the ./dist folder

COPY ./dist /app/dist
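Put together, the example could look like the sketch below. It reuses the instructions from the original Dockerfile unchanged; the only assumption is the reordering, which places the narrowed COPY last so that the two sed layers also stay cached when the build artifacts change.

```dockerfile
FROM php:7.1-apache

ENV APACHE_DOCUMENT_ROOT=/app/dist

# These steps do not depend on the application files, so they come first
# and stay cached across rebuilds.
RUN sed -ri -e 's!/var/www/html!${APACHE_DOCUMENT_ROOT}!g' /etc/apache2/sites-available/*.conf
RUN sed -ri -e 's!/var/www/!${APACHE_DOCUMENT_ROOT}!g' /etc/apache2/apache2.conf /etc/apache2/conf-available/*.conf

# Copy only the ./dist folder, and do it last because it changes most often.
COPY ./dist /app/dist
```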

Combine “apt-get update” with “apt-get install” to avoid outdated packages

FROM debian

RUN apt-get update

RUN apt-get -y install ssh vim default-jdk

The above may seem like a valid Dockerfile at first, but under the hood the build process creates separate layers for the two RUN instructions and caches them independently. This means that even if you add another package to the apt-get install step, apt-get update will not be re-run (unless you manually invalidate the cache), because its instruction has not changed. The install step then works from a stale package index and may pull outdated versions or fail outright.

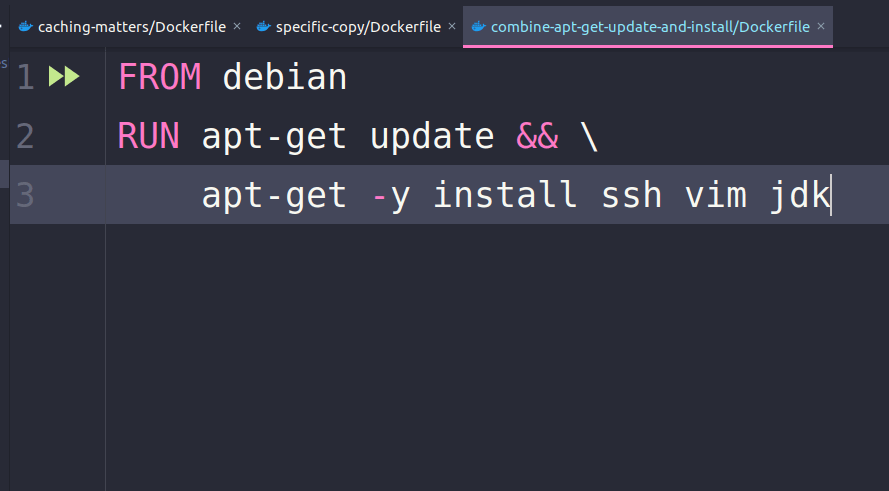

A simple solution is to combine the update and install steps, leading to one cacheable unit that will be invalidated atomically:

FROM debian

RUN apt-get update && \
    apt-get -y install ssh vim default-jdk
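A slightly extended sketch of the same idea is shown below. The two additions are optional and not part of the example above: --no-install-recommends skips recommended but non-essential packages, and removing /var/lib/apt/lists/* in the same RUN keeps the downloaded package index out of the layer. The package name default-jdk is an assumption about which JDK package is intended on Debian.

```dockerfile
FROM debian

# update and install share one layer, so changing the package list
# re-runs both commands together.
# default-jdk is assumed here as the Debian JDK package.
RUN apt-get update && \
    apt-get -y install --no-install-recommends ssh vim default-jdk && \
    rm -rf /var/lib/apt/lists/*
```

The cleanup has to happen in the same RUN instruction as the update; doing it in a later instruction would not shrink the earlier layer.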

Conclusion

Overall, a well-ordered Dockerfile makes a real difference in everyday development and in your release process: builds reuse the cache instead of repeating work, and installed packages stay up to date.

Do you have any other tips that you learned from experience while working with Dockerfiles?